Microsoft and OpenAI Sound Alarm Over AI Hacking

A recent joint study by Microsoft and OpenAI raises concerns by revealing that state-backed hacker groups are attempting to exploit AI tools such as ChatGPT. The research identifies actors linked to Russia, North Korea, Iran, and China using ChatGPT to gather intelligence on targets, refine attack scripts, and craft more convincing social engineering lures.

The findings show how the cybercrime landscape is evolving as attackers adopt new technologies to extend their capabilities. Although no major attacks using large language models have been reported yet, the potential risk calls for vigilance.

Cybercriminals Explore AI’s Potential

Freepik | DC Studio | Cybercriminals and other adversaries are testing new AI technologies for their operations.

Microsoft stated in a blog post, “Cybercrime syndicates, nation-state threat actors, and other adversaries are actively delving into newly emerging AI technologies, aiming to grasp their potential utility for their operations and the security measures they might need to evade.”

Strontium, a hacking group believed to be affiliated with Russian military intelligence, has reportedly used AI models to research satellite communication protocols, radar imaging technologies, and specific technical parameters. Also known as APT28 or Fancy Bear, the group has been active during the Russia-Ukraine war and gained notoriety for targeting Hillary Clinton’s 2016 presidential campaign.

Microsoft also revealed that Strontium uses AI for basic scripting tasks such as file manipulation, data selection, regular expressions, and multiprocessing, which suggests an effort to automate and optimize its technical operations.

Target Research and Phishing Content

Thallium, a North Korean hacking group, has used AI models to research publicly disclosed vulnerabilities and the organizations it targets. The group has also used AI for basic scripting and for drafting content for phishing campaigns.

Instagram | our.today | Microsoft and OpenAI haven’t found any major AI-driven attacks.

According to Microsoft, the Iranian group Curium also used AI to generate phishing emails and code designed to evade detection by antivirus software. Chinese state-affiliated hackers are likewise using AI for research, scripting, translation, and refining existing tools.

No Major Attacks Yet

Microsoft and OpenAI have not detected any significant attacks leveraging AI so far. Even so, the companies have shut down all accounts and assets linked to these hacking groups.

“We believe it’s crucial to publish this research, unveiling the initial, gradual maneuvers made by recognizable threat actors. By doing so, we aim to provide insight into our efforts to thwart and counter these actions alongside the defender community,” Microsoft stated.

Future AI Attack Concerns

Although current use of AI in cyberattacks appears limited, Microsoft warned of future risks such as voice impersonation. “AI-driven fraud poses a significant worry. Take voice synthesis as a prime instance, where a mere three-second voice snippet can educate a model to mimic anyone’s voice convincingly,” the company explained. As AI capabilities continue to evolve, organizations will need to stay vigilant and address emerging threats proactively to safeguard digital integrity and trust.

Pexels | Salvatore De Lellis | Microsoft employs AI defenses to counter AI-enabled attacks.

Microsoft’s AI Defense

Microsoft is using AI of its own to counter AI-enabled attacks. “Artificial intelligence empowers attackers to elevate the sophistication of their assaults, leveraging ample resources to invest in its enhancement,” said Homa Hayatyfar, principal detection analytics manager at Microsoft. “This trend is evident among the 300+ threat actors monitored by Microsoft, and we leverage AI to safeguard, identify, and react accordingly.”

Microsoft is building Security Copilot, an AI assistant designed to help cybersecurity professionals identify breaches and make sense of the vast volume of security data generated every day. Following significant breaches of its Azure cloud and an incident in which Russian hackers spied on company executives, Microsoft is also overhauling its software security practices.

Through these measures, Microsoft aims to strengthen defenses against evolving cyber threats and make individuals and organizations more resilient.

More in Tech

-

`

5 Savings Accounts That Will Earn You the Most Money in 2024

In 2024, choosing the right savings account is more critical than ever. With the array of options available, knowing which savings...

June 5, 2024 -

`

The Complete Relationship Timeline of Taylor Swift & Travis Kelce

When you think of unlikely couples, Taylor Swift and Travis Kelce might not be the first pair that comes to mind....

May 29, 2024 -

`

What is Business Administration and What Opportunities Does it Offer?

In today’s bustling world of commerce and industry, the term “business administration” often looms large, yet its true essence remains shrouded...

May 22, 2024 -

`

What is AI? Exploring the World of Artificial Intelligence

In today’s rapidly evolving technological landscape, the term “Artificial Intelligence” (AI) has become a buzzword that sparks curiosity, speculation, and even...

May 16, 2024 -

`

How Many Jobs Are Available in Real Estate Investment Trusts? Exploring Career Opportunities

Are you seeking a career path with a blend of financial savvy and a knack for the real estate market? Look...

May 9, 2024 -

`

The Staggering Net Worth of the Richest Podcaster Joe Rogan in 2024

Joe Rogan has become a household name, largely due to his immensely popular podcast, “The Joe Rogan Experience.” With a blend...

April 29, 2024 -

`

What Are Routing Numbers & Do Credit Cards Have One?

When managing your finances, understanding the various numbers and terms associated with your bank accounts and credit cards is crucial. A...

April 24, 2024 -

`

Tesla Stock: Let’s Address the Elephant in the Room

Why is Tesla stock dropping? This is the million-dollar question that has been on the minds of investors and enthusiasts alike...

April 16, 2024 -

`

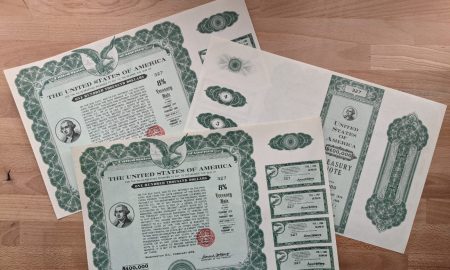

Bearer Bonds: Definition, How They Work & Are They Valuable?

Bearer bonds are unique securities that have a colorful history and a distinctive way of functioning that sets them apart from...

April 10, 2024

You must be logged in to post a comment Login